The Campus Labs system Lehigh uses for student engagement is called “LINC”, and it sucks. There, I said it. It’s a bloated, confusing React app that hinders club activities on campus. I say that as someone who has had to administer a LINC page as a club president, and now I need to contact all of the clubs to get their info for the yearbook.

I was able to get a CSV file from an administrator with the email addresses of all the club presidents, but it doesn’t say which club each of them leads.

I need to email all of the clubs to get them to submit photos and info to the yearbook, and my generic call-to-action mail merge isn’t getting great results.

So, let’s get that information ourselves.

First we need a list of all the clubs

That’s relatively easy. Just gotta scroll down through https://lehigh.campuslabs.com/engage/organizations, repeatedly clicking the “load more” button at the bottom until the page lists every currently active club.

Then we can download the HTML.

Get the clubs’ URLs out of the HTML

This is what a single club’s entry looks like (there are around 350 clubs at Lehigh). Have they never heard of classes?

<div>

<a href="/engage/organization/marching97" style="text-decoration: none;">

<div tabindex="-1" style="color: rgba(0, 0, 0, 0.870588); transition: all 450ms cubic-bezier(0.23, 1, 0.32, 1) 0ms; box-sizing: border-box; font-family: "Source Sans Pro", sans-serif; box-shadow: rgba(0, 0, 0, 0.117647) 0px 1px 6px, rgba(0, 0, 0, 0.117647) 0px 1px 4px; border-top-left-radius: 2px; border-top-right-radius: 2px; border-bottom-right-radius: 2px; border-bottom-left-radius: 2px; z-index: 1; height: 100%; border: 10px; display: block; cursor: pointer; text-decoration: none; margin: 0px 0px 20px; padding: 0px; outline: none; font-size: 16px; font-weight: inherit; position: relative; line-height: 16px; background-image: none; background-position: initial initial; background-repeat: initial initial;">

<div style="padding-bottom: 0px;">

<div>

<div style="margin-left: 0px; padding: 15px 30px 11px 108px; position: relative; background-color: rgb(255, 255, 255); height: 82px; overflow: hidden;">

<img alt="" size="75" src="https://images.collegiatelink.net/clink/images/c0489b78-ab1d-440e-a2e6-9f5d7bd331810db2b1fd-31d2-489f-acec-9202a9e0d090.png?preset=small-sq" style="color: rgb(255, 255, 255); background-color: rgb(188, 188, 188); display: inline-flex; align-items: center; justify-content: center; font-size: 37.5px; border-top-left-radius: 50%; border-top-right-radius: 50%; border-bottom-right-radius: 50%; border-bottom-left-radius: 50%; height: 75px; width: 75px; position: absolute; top: 9px; left: 13px; margin: 8px; background-size: 55px;">

<div style="font-size: 18px; font-weight: 600; color: rgb(73, 73, 73); padding-left: 5px; text-overflow: ellipsis; margin-top: 5px;"> Marching 97 </div>

<p class="DescriptionExcerpt" style="font-size: 14px; line-height: 21px; height: 45px; margin: 15px 0px 0px; color: rgba(0, 0, 0, 0.541176); overflow: hidden; text-overflow: ellipsis; display: -webkit-box; -webkit-line-clamp: 2; -webkit-box-orient: vertical; padding-left: 5px;">Since 1906, the Marching 97 has been a part of Lehigh with its signature leg-liftery, sing-singery, and a unique brand of spirit called psyche! The Marching 97 performs a new student-written show during halftime at every performance.</p>

</div>

</div>

</div>

</div>

</a>

</div>

What we need is each club’s unique URL, which is embedded somewhere inside this hellscape of React output.

/engage/organization/marching97

A little regex can help us do that:

\/engage\/organization\/[^"]*

I like building regexes on regexr.com.
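If you’d rather not paste the page into regexr.com, the same pattern works with Python’s built-in re module. This is a minimal sketch, assuming the saved directory page is called organizations.html (a hypothetical filename); it also drops duplicate paths while keeping page order, in case a club link shows up more than once.

```python
import re

# Same pattern as above; forward slashes don't need escaping in Python.
ORG_PATH = re.compile(r'/engage/organization/[^"]*')


def extract_org_paths(html):
    """Return each club path found in the HTML, in page order, without duplicates."""
    # dict.fromkeys keeps the first occurrence of each path and discards repeats
    return list(dict.fromkeys(ORG_PATH.findall(html)))


def write_org_paths(src="organizations.html", dst="All_Orgs.txt"):
    """Read the saved page (hypothetical filename) and write one path per line."""
    with open(src, encoding="utf-8") as f:
        paths = extract_org_paths(f.read())
    with open(dst, "w", encoding="utf-8") as out:
        out.writelines(path + "\n" for path in paths)
```

Calling write_org_paths() once produces an All_Orgs.txt in the same one-path-per-line shape the next step expects.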

Turn those into full URLs

Now we’ve got all of the paths, from AlphaOmegaEpsilon to zoellnerartscenter, stored in All_Orgs.txt. Time to turn those into full URLs with a quick Perl script.

perl -e 'while (<>) {print "https://lehigh.campuslabs.com$_"}' < All_Orgs.txt > All_Orgs_full.txt
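The Perl one-liner works because each line from All_Orgs.txt still carries its trailing newline, so the output stays one URL per line. For comparison, here is a sketch of the same step in Python using the standard library’s urllib.parse.urljoin (my substitution, not what the post uses); it also skips blank lines.

```python
from urllib.parse import urljoin

BASE_URL = "https://lehigh.campuslabs.com"


def to_full_urls(paths):
    """Turn '/engage/organization/...' paths into absolute club URLs."""
    # strip() removes the trailing newline; blank lines are skipped entirely
    return [urljoin(BASE_URL, p.strip()) for p in paths if p.strip()]


def convert_file(src="All_Orgs.txt", dst="All_Orgs_full.txt"):
    """Read one path per line from src and write one full URL per line to dst."""
    with open(src, encoding="utf-8") as f:
        urls = to_full_urls(f)
    with open(dst, "w", encoding="utf-8") as out:
        out.writelines(url + "\n" for url in urls)
```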

Set up our Python Environment

In 4 easy steps:

mkdir scraper && cd scraper

virtualenv venv

source venv/bin/activate

pip install beautifulsoup4

Determine what needs to be scraped

The anatomy of each club page looks like this:

<!DOCTYPE html>

<html>

<head>

<script>Google Analytics</script>

<script>Various other things</script>

<link rel="stylesheet" href="some_css_file.css">

</head>

<body>

<div id="react-app"></div>

<script>window.initialAppState={LOTS OF GOOD STUFF HERE}</script>

</body>

</html>

This is a React app, so the page we download isn’t pretty HTML. Instead, all of the info is stored in a JSON object called “initialAppState”, which React then renders into the actual content in your browser.

It’s full of good stuff. Let’s tear apart the page for the a cappella group A Whole Step Up:

(I’ve removed some items for the sake of succinctness.)

{

"institution": {

"id": 3928,

"name": "LINC- Lehigh Involvement Connection",

"coverPhoto": "edf6fa7f-9f57-492d-9f72-227bc6a877206c03f3f6-70e2-4fdc-b1e1-11ad2ada0a88.png",

"src": null,

"accentColor": "#11b875",

"config": {

"institution": {

"name": "Lehigh University",

"campusLabsHostUrl": "https://lehigh.campuslabs.com/engage/"

},

"communityTimeZone": "America/New_York",

"communityDisplayName": "LINC- Lehigh Involvement Connection",

"communityDirectoryEnabled": false,

"analytics": {

"enabled": true,

"property": "UA-309883-56"

}

}

},

"preFetchedData": {

"event": null,

"organization": {

"id": 26298,

"communityId": 118,

"institutionId": 3928,

"name": "A Whole Step Up",

"shortName": "A Whole Step Up",

"nameSortKey": "A",

"websiteKey": "wholestep",

"email": "Redacted",

"description": "<p>A Whole Step Up is Lehigh's best, and only, all-male a cappella group. Whole Step is dedicated to delivering high quality, comedic music into the hearts, minds, and souls of its listeners.</p>",

"summary": "A Whole Step Up is Lehigh's best, and only, all-male a cappella group. Whole-Step is dedicated to delivering high quality, comedic music into the hearts, minds, and souls of its listeners.",

"status": "Active",

"comment": null,

"showJoin": true,

"statusChangeDateTime": null,

"startDate": "1969-12-31T00:00:00+00:00",

"endDate": null,

"parentId": 26237,

"wallId": 32888,

"discussionId": 32888,

"groupTypeId": null,

"organizationTypeId": 941,

"cssConfigurationId": 3782,

"deleted": false,

"enableGoogleCalendar": false,

"modifiedOn": "0001-01-01T00:00:00+00:00",

"socialMedia": {

"externalWebsite": "",

"flickrUrl": "",

"googleCalendarUrl": "",

"googlePlusUrl": "",

"instagramUrl": "",

"linkedInUrl": "",

"pinterestUrl": "",

"tumblrUrl": "",

"vimeoUrl": "",

"youtubeUrl": "",

"facebookUrl": "https://www.facebook.com/awholestepup",

"twitterUrl": null,

"twitterUserName": null

},

"profilePicture": "d56fb8db-2533-41c4-b7c6-7e56b9b5b8c1f471c68d-8e54-4654-9236-31310cb6246d.jpg",

"organizationType": {

"id": 941,

"name": "Student Senate Recognized Organization"

},

"primaryContact": {

"id": "Redacted",

"firstName": "Garrett",

"preferredFirstName": null,

"lastName": "Redacted",

"primaryEmailAddress": "Redacted",

"profileImageFilePath": null,

"institutionId": 3928,

"privacy": "Unselected"

},

"isBranch": false,

"contactInfo": [{

Redacted

}]

},

"article": null

},

"imageServerBaseUrl": "https://images.collegiatelink.net/clink/images/",

"serverSideRender": false,

"baseUrl": "https://lehigh.campuslabs.com",

"serverSideContextRoot": "/engage",

"cdnBaseUrl": "https://static.campuslabsengage.com/discovery/2018.4.13.4"

}

Retrieve the Info with Python

Since we’ve got all of the URLs stored in a file, I think it makes the most sense to iterate through the file with a bash script and pass each URL to a Python script as a command-line argument.

Usually with BeautifulSoup we could just select the element we want by class or id, but unfortunately this script tag has neither, so there’s nothing to identify it by.

I’m going to assume that all of these pages are laid out identically and that the JSON is always located in the 5th script tag on the page.
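Counting script tags will break if Campus Labs ever adds or removes one. As a sturdier alternative, here is a sketch that picks out the script by its content instead, looking for the one whose text contains window.initialAppState. It swaps in the standard library’s html.parser.HTMLParser for BeautifulSoup, and the AppStateFinder and find_app_state_script names are hypothetical.

```python
from html.parser import HTMLParser


class AppStateFinder(HTMLParser):
    """Collect the text of the first <script> tag that assigns window.initialAppState."""

    def __init__(self):
        super().__init__()
        self._in_script = False
        self._chunks = []
        self.app_state_script = None

    def handle_starttag(self, tag, attrs):
        if tag == "script":
            self._in_script = True
            self._chunks = []

    def handle_data(self, data):
        # Script contents may arrive in several pieces, so buffer them
        if self._in_script:
            self._chunks.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_script:
            self._in_script = False
            text = "".join(self._chunks)
            if self.app_state_script is None and "window.initialAppState" in text:
                self.app_state_script = text


def find_app_state_script(html):
    """Return the initialAppState script text, or None if the page has none."""
    finder = AppStateFinder()
    finder.feed(html)
    finder.close()
    return finder.app_state_script
```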

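import sys

from bs4 import BeautifulSoup

# The URL to scrape is passed in as the first command-line argument
url = sys.argv[1]

# simple_get() isn't shown in this post; it fetches the page and returns its HTML (None on failure)
response = simple_get(url)

# If we got a successful response....
if response is not None:
    html = BeautifulSoup(response, 'html.parser')

    # There are multiple <script> tags containing things like Google Analytics,
    # the React client library, and some other stuff, but we only care about the
    # one with the initialAppState JSON object in it (the 5th, so index 4)
    script_sections = html.find_all('script')
    print(script_sections[4].text)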

Next we save that element:

json_raw = script_sections[4].text

So the variable json_raw now looks like this:

window.initialAppState = {...};

Now we’ve got to slice off everything outside the curly braces and parse the JSON into a Python dictionary.

import json

# Drop the 25-character "window.initialAppState = " prefix and the trailing ";"
json_raw = json_raw[25:-1]
club_info = json.loads(json_raw)

And we’ve got our JSON as a Python dictionary!
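Slicing at [25:-1] depends on the exact spacing of "window.initialAppState = "; the page skeleton earlier even shows it written without spaces. Here is a sketch that splits on the first "=" instead, assuming the script holds a single assignment followed by an optional semicolon (parse_app_state is a hypothetical name):

```python
import json


def parse_app_state(script_text):
    """Turn 'window.initialAppState = {...};' into a Python dict."""
    # Everything after the first '=' is the JSON literal, plus maybe a ';'
    _, sep, literal = script_text.partition("=")
    if not sep:
        raise ValueError("no assignment found in script text")
    # Drop surrounding whitespace, then the trailing semicolon if present
    return json.loads(literal.strip().rstrip(";"))
```

This accepts both window.initialAppState = {...}; and window.initialAppState={...} without counting characters.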

Write the relevant info to a CSV file

Now that we’ve identified the relevant fields, all we have to do is pull everything we want out of the variable holding the JSON!

import csv

fields = [
    club_info['preFetchedData']['organization']['primaryContact']['primaryEmailAddress'],
    club_info['preFetchedData']['organization']['primaryContact']['firstName'],
    club_info['preFetchedData']['organization']['socialMedia'].get('facebookUrl', ''),
]

# Append one row per club; newline='' lets the csv module handle line endings
with open('clubs.csv', 'a', newline='') as csvfile:
    club_writer = csv.writer(csvfile)
    club_writer.writerow(fields)

Not every club has every social media field in its JSON, so .get() returns an empty string when a field is missing instead of raising a KeyError.
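Since the whole point is to match each president’s email to a club, the row also needs the club’s name. Here is a sketch of a more defensive row builder; the dig, build_row, and append_row helpers are hypothetical, and it assumes (I haven’t verified this) that some clubs may have a null primaryContact or socialMedia, which would make the chained lookups above raise a TypeError.

```python
import csv


def dig(data, *keys, default=""):
    """Follow nested keys, returning default if any level is missing or null."""
    for key in keys:
        if not isinstance(data, dict):
            return default
        data = data.get(key)
    return default if data is None else data


def build_row(club_info):
    """Club name, president email, first name, and Facebook URL for one club."""
    org = dig(club_info, "preFetchedData", "organization", default={})
    return [
        dig(org, "name"),
        dig(org, "primaryContact", "primaryEmailAddress"),
        dig(org, "primaryContact", "firstName"),
        dig(org, "socialMedia", "facebookUrl"),
    ]


def append_row(path, row):
    # newline="" lets the csv module control line endings
    with open(path, "a", newline="", encoding="utf-8") as csvfile:
        csv.writer(csvfile).writerow(row)
```

A call like append_row("clubs.csv", build_row(club_info)) would then stand in for the fields list and the with block above.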

Make a bash script to do this for all the clubs!

- Create get_clubs.sh:

#!/bin/bash

source venv/bin/activate

# Run the scraper once for every club URL (one URL per line)
while read -r p; do
    python scraper.py "$p"
done < All_Orgs_full.txt

- Make it executable with chmod +x get_clubs.sh, then run it with ./get_clubs.sh
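If you’d rather skip bash, the same loop can be driven from Python. This sketch assumes scraper.py takes the URL as its only argument, as in get_clubs.sh; the one-second pause between requests is my addition, to go easy on the server, and run_all and clean_urls are hypothetical names.

```python
import subprocess
import sys
import time


def clean_urls(lines):
    """Return the non-blank lines, with surrounding whitespace stripped."""
    return [line.strip() for line in lines if line.strip()]


def run_all(path="All_Orgs_full.txt", delay=1.0):
    with open(path, encoding="utf-8") as f:
        urls = clean_urls(f)
    for url in urls:
        # sys.executable is the interpreter running this script (the venv's, if activated)
        result = subprocess.run([sys.executable, "scraper.py", url])
        if result.returncode != 0:
            print(f"scraper.py failed for {url}", file=sys.stderr)
        time.sleep(delay)
```

Unlike the bash loop, this reports which URLs failed instead of silently moving on.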

Full code and results:

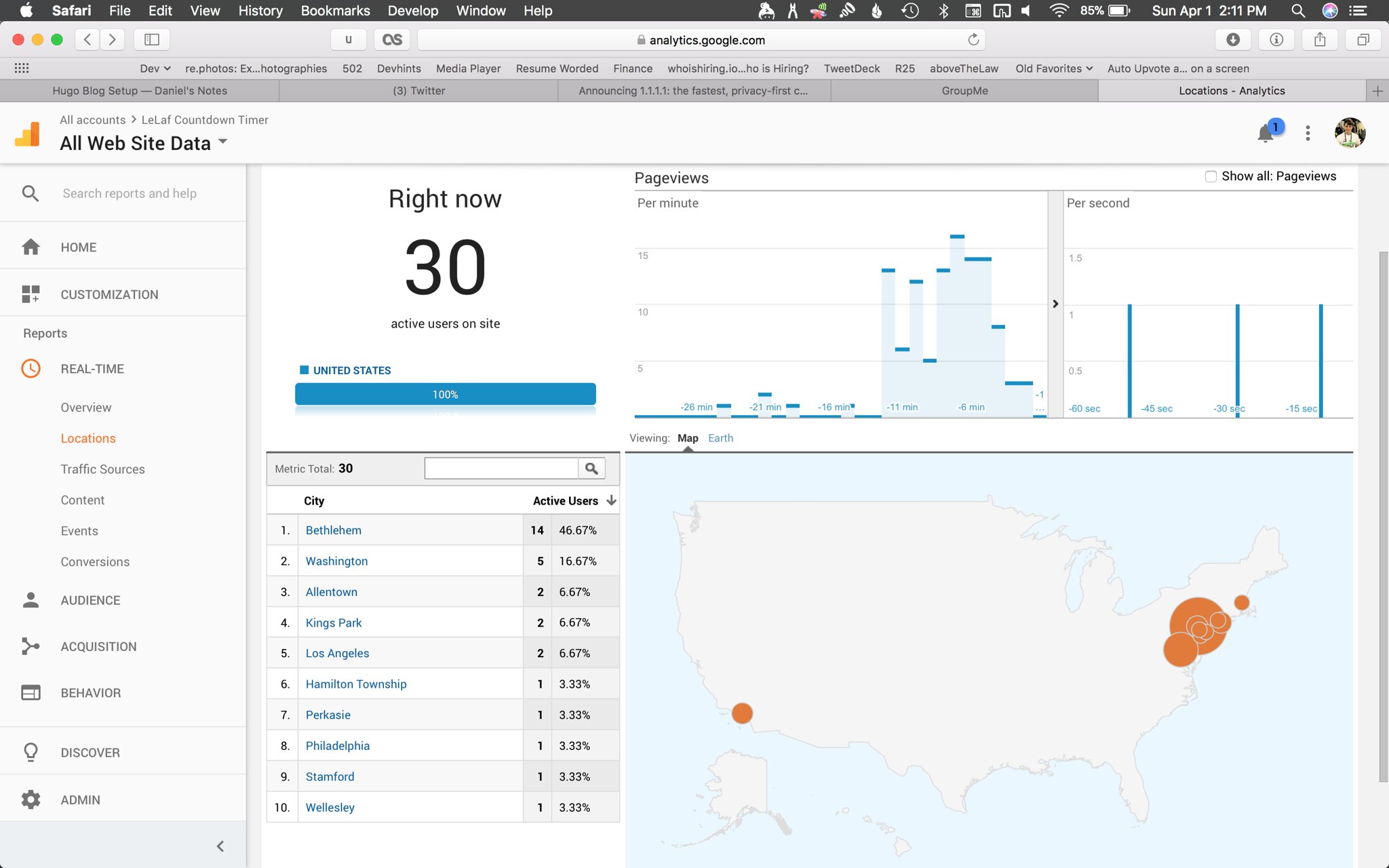

Looks like it worked! :-)

Evan C
